DynamoDB

If you’ve just landed here, we’re doing a “Become a Cloud Architect” Unicorn Workshop by building a Unicorn Pursuit Web App step by step, and you’re more than welcome to join!

About DynamoDB

DynamoDB is a serverless, fully managed NoSQL database service that provides fast and predictable performance with seamless scalability.

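DynamoDB data is stored on SSD storage, and is always replicated across three Availability Zones within a Region. DynamoDB gives two read consistency options:

- Eventually consistent reads (the default), meaning that a read might not reflect a very recent write, but consistency is usually reached within a second.

- Strongly consistent reads, where the result reflects all writes that were acknowledged as successful before the read.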
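As a rough sketch of how this choice shows up in practice: the GetItem API takes a `ConsistentRead` flag, and a read is eventually consistent unless the flag is set. The helper below only builds the request parameters in the low-level DynamoDB JSON format, so it runs without AWS credentials. The helper name and the sample values are hypothetical; the table and key names match the table we create later in this section.

```python
def build_get_item_params(table_name, project_id, owner, strongly_consistent=False):
    """Build parameters for a DynamoDB GetItem call (low-level client format)."""
    return {
        "TableName": table_name,
        # Low-level format: each value is wrapped in a type descriptor
        # ("N" for number, passed as a string; "S" for string).
        "Key": {
            "id": {"N": str(project_id)},
            "owner": {"S": owner},
        },
        # False (the API default) requests an eventually consistent read.
        "ConsistentRead": strongly_consistent,
    }


# Sample values are made up for illustration.
params = build_get_item_params("UnicornDynamoDBVoting", 1, "alice", strongly_consistent=True)
print(params)

# With boto3 installed and credentials configured, this could be sent as:
#   boto3.client("dynamodb").get_item(**params)
```

Keeping the flag off by default mirrors the API, and eventually consistent reads also cost half as much read capacity.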

With provisioned capacity, you are billed hourly for the read and write capacity you provision. Auto scaling itself is free: you’re paying for whatever capacity is provisioned at each moment. Apart from this, you pay for the data you store, including indexes.

The elements of DynamoDB are:

- Tables

- Items (similar to a ROW in RDS)

- Attributes (similar to COLUMN in RDS)

- Supports Key-Value (Key=Name, Value=Data) and Document Data

- Documents can be written in HTML, JSON or XML

When creating a DynamoDB table, you need a Primary Key, which is used to store and retrieve data. There are 2 types of Primary Key:

- Partition Key (also called Hash Key), which is a single attribute with a unique value per item, like Application ID or User ID.

- Composite Key, which is a combination of a Partition Key and a Sort Key (also called Range Key). The Sort Key is not required and, like the Partition Key, holds a single value. This is used if the Partition Key is not necessarily unique: only the combination of the two has to be unique.

DynamoDB Secondary Indexes

Indexes allow us to query on attributes that are not part of the table’s Primary Key. You select which attributes you want to be able to query quickly. There are 2 types of Secondary Indexes:

- LSI (Local Secondary Index) shares the table’s Partition Key but uses a different Sort Key, which gives you an alternative Sort Key to query by. LSI keys are always composite (partition + sort key). Remember, an LSI can only be created at the same time as the table.

- GSI (Global Secondary Index) is MUCH more flexible. A GSI acts as another way to query the data, with a different Partition Key AND, optionally, a different Sort Key. You can add a GSI after the table has been created.
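To make this more concrete, here is a hedged sketch of a table definition in the shape the CreateTable API expects, with one GSI that would let us look up projects by owner, ordered by votes. The index name `OwnerIndex` and the helper `key_attribute_mismatch` are hypothetical. The helper checks a rule that is easy to trip over: every attribute used as a table or index key has to be declared in `AttributeDefinitions`, and nothing else should be.

```python
table_definition = {
    "TableName": "UnicornDynamoDBVoting",
    "KeySchema": [
        {"AttributeName": "id", "KeyType": "HASH"},
        {"AttributeName": "owner", "KeyType": "RANGE"},
    ],
    # "votes" is declared only because the GSI below uses it as a key.
    "AttributeDefinitions": [
        {"AttributeName": "id", "AttributeType": "N"},
        {"AttributeName": "owner", "AttributeType": "S"},
        {"AttributeName": "votes", "AttributeType": "N"},
    ],
    "GlobalSecondaryIndexes": [
        {
            "IndexName": "OwnerIndex",  # hypothetical name
            "KeySchema": [
                {"AttributeName": "owner", "KeyType": "HASH"},
                {"AttributeName": "votes", "KeyType": "RANGE"},
            ],
            "Projection": {"ProjectionType": "ALL"},
            "ProvisionedThroughput": {"ReadCapacityUnits": 5, "WriteCapacityUnits": 5},
        }
    ],
    "ProvisionedThroughput": {"ReadCapacityUnits": 5, "WriteCapacityUnits": 5},
}


def key_attribute_mismatch(definition):
    """Return (missing, unused): key attributes that are not declared,
    and declared attributes that no key schema uses."""
    used = {k["AttributeName"] for k in definition["KeySchema"]}
    for index_type in ("GlobalSecondaryIndexes", "LocalSecondaryIndexes"):
        for index in definition.get(index_type, []):
            used |= {k["AttributeName"] for k in index["KeySchema"]}
    declared = {a["AttributeName"] for a in definition["AttributeDefinitions"]}
    return sorted(used - declared), sorted(declared - used)


print(key_attribute_mismatch(table_definition))
```

An LSI would go under `LocalSecondaryIndexes` instead, would have to reuse `id` as its HASH key, and could not be added after the table exists.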

Code

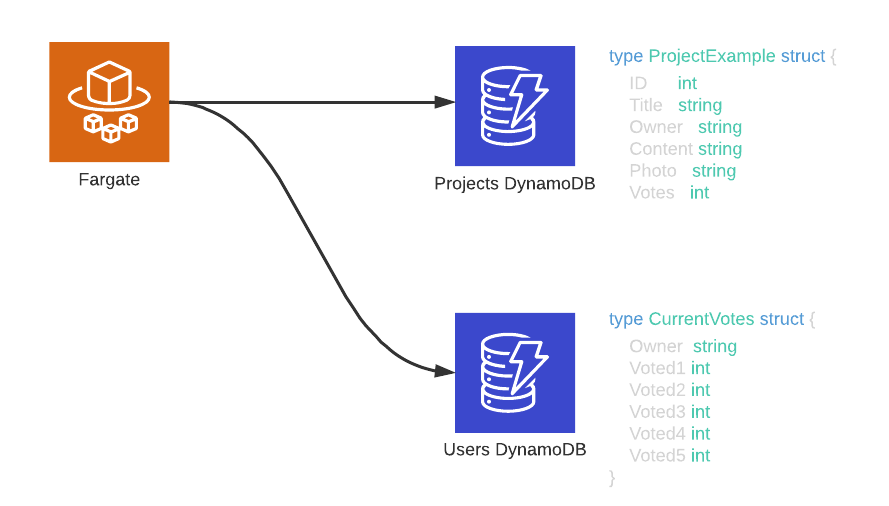

Before we start with the configuration, let’s understand what we’re building. Refer to the diagram below:

We need to create a DynamoDB table to store the following data (quoted from the Go code):

    type project struct {
        ID      int    `json:"id"`
        Title   string `json:"title"`
        Owner   string `json:"owner"`
        Content string `json:"content"`
        Photo   string `json:"photo"`
        Votes   int    `json:"votes"`
    }

First we need to decide on our Primary Key. We can design our app so that the Project ID is unique and use it as the Partition Key. Since it’s a voting database, and having in mind how we will query it, we also add “owner” as the Sort Key.

Ok, so now we need to consider Items. Items are equivalent to ROWS in Relational Databases, meaning a set of attributes identified by a unique Primary Key. DynamoDB only needs Attribute Definitions for attributes used in a key schema (the table’s or an index’s), so we create the Table with Attribute Definitions for our Partition and Sort Key only. The remaining attributes are simply written as part of each item, so definitions like the following are NOT declared on the table, unless an attribute later becomes an index key:

    ddb.CfnTable.AttributeDefinitionProperty(attribute_name="title", attribute_type="S"),
    ddb.CfnTable.AttributeDefinitionProperty(attribute_name="content", attribute_type="S"),
    ddb.CfnTable.AttributeDefinitionProperty(attribute_name="photo", attribute_type="S"),
    ddb.CfnTable.AttributeDefinitionProperty(attribute_name="votes", attribute_type="N"),

In AWS CDK we first need to import DynamoDB, alongside the already imported s3:

    from aws_cdk import (
        aws_s3 as s3,
        aws_dynamodb as ddb,
        core
    )

And then provision an object, which would look like this (notice that we are NOT defining the additional Attributes title, content, photo and votes; we will add them later from the Go AWS SDK):

    # Create DynamoDB Table for Project Info (ID, Title, Owner, Content, Photo and Votes)
    voting = ddb.CfnTable(
        self, "UnicornDynamoDBVoting",
        table_name="UnicornDynamoDBVoting",
        # Composite Primary Key: id (partition) + owner (sort)
        key_schema=[
            ddb.CfnTable.KeySchemaProperty(attribute_name="id", key_type="HASH"),
            ddb.CfnTable.KeySchemaProperty(attribute_name="owner", key_type="RANGE"),
        ],
        # Only key attributes are declared here
        attribute_definitions=[
            ddb.CfnTable.AttributeDefinitionProperty(attribute_name="id", attribute_type="N"),
            ddb.CfnTable.AttributeDefinitionProperty(attribute_name="owner", attribute_type="S"),
        ],
        provisioned_throughput=ddb.CfnTable.ProvisionedThroughputProperty(
            read_capacity_units=5,
            write_capacity_units=5
        )
    )

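At the moment, we won’t manage IAM, but we will come back here later, and add that part. Note that grant_read_write_data is a method of the higher-level ddb.Table construct, not of ddb.CfnTable, so with the table defined through ddb.Table it will look something like this: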

voting.grant_read_write_data(fargate_unicorn)

Let’s activate our environment using source .env/bin/activate before deploying the stack, and see what happens. It all looks fine: cdk synth creates a CloudFormation template that contains the following DynamoDB Resource:

    (.env) ➜ iac git:(dev) ✗ cdk ls
    UnicornIaC
    (.env) ➜ iac git:(dev) ✗ cdk diff
    Stack UnicornIaC
    Resources
    [+] AWS::DynamoDB::Table UnicornDynamoDBVoting UnicornDynamoDBVoting
    (.env) ➜ iac git:(dev) ✗ cdk synth
    Resources:
      [I'll skip the S3 part, that's old news]
      UnicornDynamoDBVoting:
        Type: AWS::DynamoDB::Table
        Properties:
          KeySchema:
            - AttributeName: id
              KeyType: HASH
            - AttributeName: owner
              KeyType: RANGE
          AttributeDefinitions:
            - AttributeName: id
              AttributeType: "N"
            - AttributeName: owner
              AttributeType: S
          ProvisionedThroughput:
            ReadCapacityUnits: 5
            WriteCapacityUnits: 5
          TableName: UnicornDynamoDBVoting
          Tags:
            - Key: project
              Value: unicorn
            - Key: bu
              Value: cloud
            - Key: environment
              Value: prod
        Metadata:
          aws:cdk:path: UnicornIaC/UnicornDynamoDBVoting
      CDKMetadata:
        Type: AWS::CDK::Metadata

As you can see, due to our global settings in app.py, AWS Tags are added to all resources.

Once we do cdk deploy, we should see the resources being successfully created.

Deep Dive

You can use SQS to buffer writes, and ElastiCache to optimize reads.

DynamoDB Access Control:

- Authentication and authorization are managed by IAM.

- I’d recommend creating a Role which enables obtaining temporary access keys, which can then be used to access DynamoDB.

IMPORTANT: Use IAM Conditions to ONLY allow access to specific records. This is done by adding a Condition to an IAM Policy (for example with the dynamodb:LeadingKeys condition key), so that access is allowed only to items whose Partition Key value matches.
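A minimal sketch of such a policy, built as a Python dictionary so it can be printed as JSON. The account ID, region, and the Cognito policy variable are assumptions made for illustration, and the helper name is hypothetical. This pattern fits a table whose Partition Key is a user identifier; our voting table uses a numeric project id, so treat it as an illustration of the mechanism rather than something to paste in.

```python
import json


def build_leading_keys_policy(table_arn, partition_key_value):
    """Build an IAM policy that allows item access only where the
    partition key equals partition_key_value."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "dynamodb:GetItem",
                    "dynamodb:Query",
                    "dynamodb:PutItem",
                    "dynamodb:UpdateItem",
                ],
                "Resource": table_arn,
                "Condition": {
                    # Every partition key touched by the request must match.
                    "ForAllValues:StringEquals": {
                        "dynamodb:LeadingKeys": [partition_key_value]
                    }
                },
            }
        ],
    }


# Placeholder ARN; the policy variable assumes Cognito federated identities.
policy = build_leading_keys_policy(
    "arn:aws:dynamodb:eu-west-1:123456789012:table/UnicornDynamoDBVoting",
    "${cognito-identity.amazonaws.com:sub}",
)
print(json.dumps(policy, indent=2))
```

Scan is deliberately left out of the allowed actions, since a Scan reads across all partition keys.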

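You can query your DynamoDB table using the AWS CLI, as long as the EC2 instance has an IAM role with DynamoDB permissions. get-item needs the full primary key, so with placeholder key values the command would be something like:

    aws dynamodb get-item --table-name "table_name" --key '{"id": {"N": "1"}, "owner": {"S": "owner_name"}}'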

DynamoDB Reads - Scans and Queries

When you need to read from DynamoDB, you have a few options:

- GetItem: read a single item by providing its full primary key

- BatchGetItem: get up to 100 items in a single request, the equivalent of doing a GetItem for each one.

- Query: read items with the same Partition Key value (id in our case), optionally narrowed down by the Sort Key (owner)

- Scan: read all the items in the table. The problem is that Scan reads the ENTIRE table, and only then filters out what’s not needed, so it’s slower and consumes more read capacity.
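The difference between Query and Scan is visible in the request parameters themselves. The sketch below builds both in the low-level DynamoDB JSON format without calling AWS; the helper names and sample values are hypothetical. Expression attribute names (`#pk`, `#v`) are used as placeholders so that no attribute name can clash with a DynamoDB reserved word.

```python
def build_query_params(table_name, project_id):
    """Query: DynamoDB goes straight to the items under one partition key."""
    return {
        "TableName": table_name,
        "KeyConditionExpression": "#pk = :pk",
        "ExpressionAttributeNames": {"#pk": "id"},
        "ExpressionAttributeValues": {":pk": {"N": str(project_id)}},
    }


def build_scan_params(table_name, min_votes):
    """Scan: every item is read; the filter is applied afterwards, so the
    read capacity consumed reflects the whole table, not the result size."""
    return {
        "TableName": table_name,
        "FilterExpression": "#v >= :min",
        "ExpressionAttributeNames": {"#v": "votes"},
        "ExpressionAttributeValues": {":min": {"N": str(min_votes)}},
    }


query_params = build_query_params("UnicornDynamoDBVoting", 1)
scan_params = build_scan_params("UnicornDynamoDBVoting", 10)
print(query_params)
print(scan_params)

# With boto3 these could be sent as client.query(**query_params)
# and client.scan(**scan_params).
```

If filtering by votes turned out to be a frequent access pattern, an index with a suitable key would usually be a better fit than repeated Scans.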

Read and Write Capacity Units

Provisioned Throughput is measured in Capacity Units (and the more you provision, the more you will pay). When you create a table, you need to specify Read and Write capacity units:

- 1 Write CU = 1 write per second for an item of up to 1KB

- 1 Read CU = 1 strongly consistent read per second, or 2 eventually consistent reads per second, for an item of up to 4KB
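The arithmetic behind these units can be sketched as follows. Item sizes are rounded up to the next 4KB for reads and the next 1KB for writes, and eventually consistent reads cost half as much. The function names are hypothetical and the workload numbers are made up for illustration.

```python
import math


def read_capacity_units(item_size_kb, reads_per_second, strongly_consistent=True):
    """RCUs needed to read items of the given size at the given rate."""
    units_per_read = math.ceil(item_size_kb / 4)  # round up to 4KB blocks
    total = units_per_read * reads_per_second
    if not strongly_consistent:
        total = math.ceil(total / 2)  # eventually consistent reads cost half
    return total


def write_capacity_units(item_size_kb, writes_per_second):
    """WCUs needed to write items of the given size at the given rate."""
    return math.ceil(item_size_kb) * writes_per_second  # round up to 1KB blocks


# 6KB items read 10 times per second: ceil(6 / 4) = 2 units per read.
print(read_capacity_units(6, 10))                             # 20
print(read_capacity_units(6, 10, strongly_consistent=False))  # 10
# 2.5KB items written 10 times per second: ceil(2.5) = 3 units per write.
print(write_capacity_units(2.5, 10))                          # 30
```

The table we provisioned with 5 RCU and 5 WCU could therefore serve, for example, 5 strongly consistent reads per second of items up to 4KB.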

Alternatively, you can use the ON-DEMAND pricing model, where you don’t have to provision Read and Write CUs and DynamoDB scales automatically, so it’s perfect for workloads with unpredictable application traffic.

DynamoDB Writes

To create, update, or delete an item in a DynamoDB table, use one of the following operations:

- PutItem

- UpdateItem

- DeleteItem

For each of these operations, you must specify the entire primary key, not just part of it.
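As an illustration, here is a hedged sketch of UpdateItem parameters that would add a vote to a project. It only builds the request in the low-level DynamoDB JSON format; the helper name and sample values are hypothetical. Note that `Key` carries both the Partition Key (id) and the Sort Key (owner).

```python
def build_vote_update_params(table_name, project_id, owner, increment=1):
    """Build UpdateItem parameters that atomically add to the votes counter."""
    return {
        "TableName": table_name,
        # The entire primary key: partition key AND sort key.
        "Key": {
            "id": {"N": str(project_id)},
            "owner": {"S": owner},
        },
        # ADD on a number increments it; a missing attribute starts from 0.
        "UpdateExpression": "ADD #v :inc",
        "ExpressionAttributeNames": {"#v": "votes"},
        "ExpressionAttributeValues": {":inc": {"N": str(increment)}},
        "ReturnValues": "UPDATED_NEW",
    }


update_params = build_vote_update_params("UnicornDynamoDBVoting", 1, "alice")
print(update_params)

# With boto3 this could be sent as client.update_item(**update_params).
```

Using an update expression instead of reading the item, adding one, and writing it back keeps concurrent votes from overwriting each other.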

DAX

DAX, or DynamoDB Accelerator, is a highly available in-memory cache which is optimized for DynamoDB. DAX currently only offers a write-through cache. ElastiCache can also be used with DynamoDB, however it is not specifically optimized for it. CloudFront is a Content Delivery Network which caches content like files, web pages, videos etc, but cannot be used to cache Database data. Read Replicas are used to scale read operations for RDS instances.

Data is written at the SAME time to DynamoDB and to the cache. You query the DAX cluster directly, and on a cache miss DAX performs an Eventually Consistent Read from DynamoDB.

DAX is not suitable for apps that require Strongly Consistent reads.

IMPORTANT: If your app needs to use LAZY LOADING, you need to use ElastiCache, because DAX only supports write-through.
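The two caching strategies can be sketched with plain dictionaries. This is a toy model of the general techniques, not the DAX or ElastiCache APIs; the class names are hypothetical and a `dict` stands in for the database.

```python
class WriteThroughCache:
    """Every write goes to the database and the cache at the same time."""

    def __init__(self, store):
        self.store = store  # stands in for the database
        self.cache = {}

    def put(self, key, value):
        self.store[key] = value
        self.cache[key] = value


class LazyLoadingCache:
    """The cache is filled only when a read misses."""

    def __init__(self, store):
        self.store = store  # stands in for the database
        self.cache = {}
        self.misses = 0

    def get(self, key):
        if key not in self.cache:
            self.misses += 1
            self.cache[key] = self.store[key]  # load from the database on a miss
        return self.cache[key]


database = {}
write_through = WriteThroughCache(database)
write_through.put("project-1", 5)
print(write_through.cache)  # the value is cached right after the write

lazy = LazyLoadingCache({"project-1": 5})
lazy.get("project-1")  # first read misses and loads from the database
lazy.get("project-1")  # second read is served from the cache
print(lazy.misses)
```

Write-through keeps the cache fresh at the cost of caching data that may never be read, while lazy loading caches only what is requested but pays a miss on the first read.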

TIPS for Troubleshooting

- ProvisionedThroughputExceededException means that your application is sending more requests to the DynamoDB table than the provisioned read / write capacity can handle.

- Each AWS SDK implements an exponential backoff algorithm for better flow control. The idea behind exponential backoff is to use progressively longer waits between retries for consecutive error responses. If you’re not using an AWS SDK, you need to implement this yourself.
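If you do have to implement it yourself, a minimal sketch could look like the one below. The exception class is a stand-in for ProvisionedThroughputExceededException, the function and parameter names are hypothetical, and the random jitter is an addition on top of what is described above (it is a common way to keep many clients from retrying in lockstep).

```python
import random
import time


class ThroughputExceeded(Exception):
    """Stand-in for ProvisionedThroughputExceededException."""


def call_with_backoff(operation, max_attempts=5, base_delay=0.05,
                      max_delay=2.0, sleep=time.sleep):
    """Call operation(), retrying throttled calls with exponential backoff."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except ThroughputExceeded:
            if attempt == max_attempts - 1:
                raise  # out of retries, surface the error
            # The wait ceiling doubles with every consecutive failure.
            ceiling = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, ceiling))


# Usage: a fake write that is throttled twice before it succeeds.
attempts = {"count": 0}


def flaky_write():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ThroughputExceeded()
    return "ok"


delays = []  # record the waits instead of actually sleeping
result = call_with_backoff(flaky_write, sleep=delays.append)
print(result, attempts["count"], len(delays))
```

Passing `sleep` as a parameter keeps the helper easy to test; in real use the default `time.sleep` applies.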
